Statistics Challenge + Book Writing Updates (and Memes)

[3-Day Stats Challenge] Why Most Test-Picking Rules Are Wrong—and What to Do About It

Statistics is often taught using decision trees, flowcharts, and test-picking rules. Unfortunately, professional statisticians in industry and academia do not work like this—statistical reasoning is simply not based on linear decision-making.

Want to learn how to develop a professional statistician’s frame of mind?

Alex Molak and I are holding a free 3-day biostatistics challenge to do just that—and you’re invited!

When: Feb 25, 26, and 27, from 12:00 to 1:30 PM Eastern Time (6:00 to 7:30 PM CET, 9:00 to 10:30 AM Pacific)

Who: Anybody interested in statistics, from beginners to the more experienced

What: Lectures, Q&A, and a statistics challenge, followed by a walkthrough of the solution and its key points

Where: Join the mailing list to receive the exclusive Zoom code

[Day 1] Rethinking the Problem

All Models Are Wrong—But Some Are Useful

Modeling Starts Earlier Than Most People Think

Before We Test: Are We Answering the Right Question?

[Day 2] Design Beats Analysis

Is Design More Important Than Analysis?

Case Study

Student Task

[Day 3] From Struggle to Structure

Common approaches and pitfalls in accomplishing the task

Solution Walkthrough

Generalizing the Approach

Applying the framework to other problems

Special Offer

Q&A

What's The Plan?

[NEW OFFER] Training and Workshops

In 2025, my discussions with dozens of practitioners from industry—medical devices, biotech, and pharma—taught me two things.

There is a shortage of skill in industry, specifically for causal inference for real-world evidence.

My consulting experience across a diverse set of applications, together with my university teaching experience, makes me a unique trainer.

I began offering training and workshops in causal inference, real-world evidence, and biostatistics.

My approach is engaging, warm, and thorough. I can even leave your company with a rich set of educational material (videos, notes, Q&As, etc.) that you can reuse to train new cohorts.

The response has been overwhelming, and I’ve since trained:

Pharma RWE teams

Industry scientists in biotech

Research Labs

And more!

Ready to discuss how I can help?

I’m almost fully booked, but I could take on one additional workshop or training in 2026.

[BOOK WRITING UPDATES]

Chapter 5 was released in 2025.

Chapters 4 and 7 are both about halfway done. Together, Chapters 1-5 and 7 represent over 65% of the book—the finish is close!

If you pre-order my Causal Inference in Statistics, with Exercises, Practice Projects, and R/Python Code Notebooks, you now receive:

A 230-page PDF with the 4 chapters

An online version with the 4 chapters (see video below)

6 R code notebooks

The new parts of the book upon release (2 new chapters + 5 additional notebooks, coming soon) and any updates to the material via GitHub

A pre-order for the physical book once it is complete

Writing this book has been a tremendous challenge. Balancing client needs in my consulting work, personal obstacles (2025 was hectic not only for geopolitics but also in my personal life), and actually writing a quality book has been at the core of my professional activities.

I share this to express how thankful I am for your support 💜

You can order now—you will be the first to receive the new material when it launches 😋

A Book I’ve Read Recently

A Technical Textbook

Assumptions are tools to help us deduce A from B.

In causal inference, we use causal assumptions (e.g. no confounding) to link the observed reality of the data (i.e. correlation) with the unobservable counterfactual reality we care about (i.e. causality).

Often, assumptions are taught as if they have to be true. Of course, in a strict sense, this is right: the theory falls apart when the assumptions aren't true, since it relies on mathematical theorems.

In short, with the right assumptions, we can say that correlation IS causation, thanks to the power of mathematics.

Yet in reality, it is impossible for these assumptions to be exactly true. For example, the complexity of the world forbids us from measuring every single confounder accurately (let alone modeling them correctly).

Any experienced practitioner will know that they can get away with this state of affairs: they can judge with some confidence when and how their methods hold up even when their assumptions are not met.

And the best of the best will not only try to convince you that their judgment is sound: they will show you!

Methods from the family of sensitivity analysis, and what epidemiologists call Quantitative Bias Analysis (QBA), are ways to quantify the potential bias in your results due to unmet causal assumptions.
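To make this concrete, here is a minimal Python sketch of one well-known member of that family: the E-value of VanderWeele and Ding, together with the bounding factor it is built on. This is just one illustration of sensitivity analysis for unmeasured confounding, not necessarily the approach taken in the book discussed here or in my own Chapter 5. The function names and the observed risk ratio of 2.0 are hypothetical, chosen only for the example.

```python
import math


def bounding_factor(rr_eu: float, rr_ud: float) -> float:
    """Maximum bias (on the risk-ratio scale) from an unmeasured confounder U.

    rr_eu: strength of the exposure-U association (risk ratio, >= 1)
    rr_ud: strength of the U-outcome association (risk ratio, >= 1)
    """
    if rr_eu < 1 or rr_ud < 1:
        raise ValueError("both risk ratios must be >= 1")
    return (rr_eu * rr_ud) / (rr_eu + rr_ud - 1)


def e_value(rr: float) -> float:
    """E-value for an observed risk ratio.

    The minimum strength of association (risk-ratio scale) that an
    unmeasured confounder would need with BOTH exposure and outcome
    to fully explain away the observed association.
    """
    if rr <= 0:
        raise ValueError("a risk ratio must be positive")
    rr = max(rr, 1 / rr)  # protective effects: work with the inverse
    return rr + math.sqrt(rr * (rr - 1))


observed_rr = 2.0  # hypothetical estimate, for illustration only
e = e_value(observed_rr)
print(f"E-value: {e:.2f}")

# Sanity check: a confounder exactly as strong as the E-value on both
# sides produces a bias equal to the observed risk ratio.
print(f"Bias at the E-value: {bounding_factor(e, e):.2f}")
```

For an observed risk ratio of 2.0, the E-value is about 3.41: an unmeasured confounder would need to be associated with both the exposure and the outcome by a risk ratio of at least 3.41 each to fully explain away the result. Whether a confounder that strong is plausible is a judgment call for the subject-matter experts, which is exactly the kind of judgment these methods let you show rather than assert.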

In this day and age, any serious causal study SHOULD be using such methods—it is no longer sufficient to estimate a quantity and hope for the best.

Books like this one formalize these approaches.

Chapter 5 of my Causal Inference in Statistics book covers QBA—it’s available right now.

[PODCAST] Live Events For 2026

I’ve done many live events over the past year, all available on YouTube. I’m kicking off 2026 with an exciting guest to be announced soon.

Any guests you would love to see? Let your imagination run wild, and let me know!

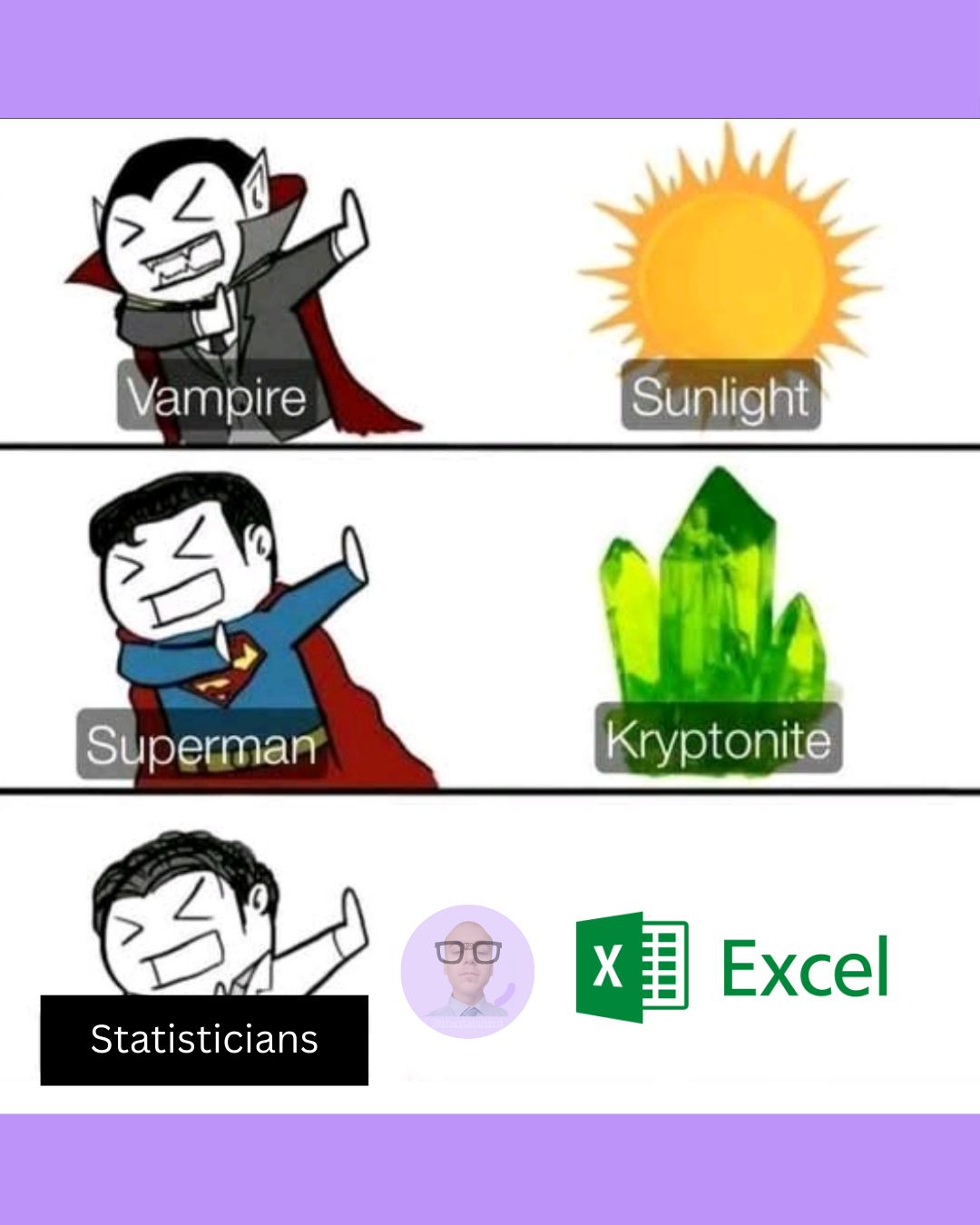

[MEMES] Felt Frisky For February

I saved the best for last.

I Must Admit I Use Excel, Lowkey Microsoft’s Best Product (And I Hate It)

I Really Don’t Like Excel For Statistics—And You Shouldn’t Either

Don’t Be Like Mr. Incredible

Until next time.

thestatsnerd,

Justin
